Martingale (probability theory)

In probability theory, a martingale is a stochastic process (i.e., a sequence of random variables) such that the conditional expected value of an observation at some time t, given all the observations up to some earlier time s, is equal to the observation at that earlier time s. A martingale is a model of a fair game. Precise definitions are given below.

History

Originally, martingale referred to a class of betting strategies that was popular in 18th century France.[1] The simplest of these strategies was designed for a game in which the gambler wins his stake if a coin comes up heads and loses it if the coin comes up tails. The strategy had the gambler double his bet after every loss so that the first win would recover all previous losses plus win a profit equal to the original stake. As the gambler's wealth and available time jointly approach infinity, his probability of eventually flipping heads approaches 1, which makes the martingale betting strategy seem like a sure thing. However, the exponential growth of the bets eventually bankrupts its users.

The concept of martingale in probability theory was introduced by Paul Pierre Lévy, and much of the original development of the theory was done by Joseph Leo Doob among others. Part of the motivation for that work was to show the impossibility of successful betting strategies.

Definitions

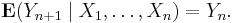

A discrete-time martingale is a discrete-time stochastic process (i.e., a sequence of random variables) X1, X2, X3, ... that satisfies for all n

i.e., the conditional expected value of the next observation, given all the past observations, is equal to the last observation.
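For a small case, the defining identity can be checked by brute force. The following is a minimal sketch, assuming the fair ±1 coin game with initial fortune 0; the function names are illustrative and not part of any library. Because all toss sequences of a given length are equally likely, the conditional expectation given a history is a plain average over the sequences that share that history.

```python
from collections import defaultdict
from fractions import Fraction
from itertools import product


def conditional_expectations(n):
    """Return {history: E[X_{n+1} | history]} for a fair +/-1 coin game.

    All 2**(n+1) toss sequences are equally likely, so the conditional
    expectation given the first n tosses is the average of X_{n+1} over
    the sequences that share that prefix.
    """
    totals = defaultdict(Fraction)
    counts = defaultdict(int)
    for tosses in product((1, -1), repeat=n + 1):
        history = tosses[:n]
        totals[history] += sum(tosses)  # X_{n+1}: fortune after n+1 tosses
        counts[history] += 1
    return {h: totals[h] / counts[h] for h in totals}


def is_martingale_up_to(n_max):
    """Check E[X_{n+1} | X_1..X_n] = X_n for every history of length 1..n_max."""
    for n in range(1, n_max + 1):
        for history, cond_exp in conditional_expectations(n).items():
            if cond_exp != sum(history):  # X_n: fortune after n tosses
                return False
    return True


print(is_martingale_up_to(8))  # prints True
```

Conditioning on the tosses is the same as conditioning on X1, ..., Xn here, since the fortunes and the tosses determine each other once the initial fortune is fixed.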

Somewhat more generally, a sequence Y1, Y2, Y3, ... is said to be a martingale with respect to another sequence X1, X2, X3, ... if for all n

$$E[Y_{n+1} \mid X_1,\ldots,X_n] = Y_n.$$

Similarly, a continuous-time martingale with respect to the stochastic process Xt is a stochastic process Yt such that for all t

This expresses the property that the conditional expectation of an observation at time t, given all the observations up to time  , is equal to the observation at time s (of course, provided that s ≤ t).

, is equal to the observation at time s (of course, provided that s ≤ t).

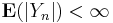

In full generality, a stochastic process Y : T × Ω → S is a martingale with respect to a filtration Σ∗ and probability measure P if

- Σ∗ is a filtration of the underlying probability space (Ω, Σ, P);

- Y is adapted to the filtration Σ∗, i.e., for each t in the index set T, the random variable Yt is a Σt-measurable function;

- for each t, Yt lies in the Lp space L1(Ω, Σt, P; S), i.e.

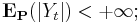

- for all s and t with s < t and all F ∈ Σs,

-

- where χF denotes the indicator function of the event F. In Grimmett and Stirzaker's Probability and Random Processes, this last condition is denoted as

- which is a general form of conditional expectation.[2]

It is important to note that the property of being a martingale involves both the filtration and the probability measure (with respect to which the expectations are taken). It is possible that Y could be a martingale with respect to one measure but not another one; the Girsanov theorem offers a way to find a measure with respect to which an Itō process is a martingale.

Examples of martingales

- Suppose Xn is a gambler's fortune after n tosses of a fair coin, where the gambler wins $1 if the coin comes up heads and loses $1 if the coin comes up tails. The gambler's conditional expected fortune after the next trial, given the history, is equal to his present fortune, so this sequence is a martingale. This is also known as D'Alembert system.

- Let Yn = Xn2 − n where Xn is the gambler's fortune from the preceding example. Then the sequence { Yn : n = 1, 2, 3, ... } is a martingale. This can be used to show that the gambler's total gain or loss varies roughly between plus or minus the square root of the number of steps.

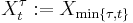

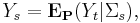

- (de Moivre's martingale) Now suppose an "unfair" or "biased" coin, with probability p of "heads" and probability q = 1 − p of "tails". Let

-

- with "+" in case of "heads" and "−" in case of "tails". Let

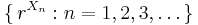

- Then { Yn : n = 1, 2, 3, ... } is a martingale with respect to { Xn : n = 1, 2, 3, ... }. To show this

- (Polya's urn) An urn initially contains r red and b blue marbles. One is chosen randomly. Then it is put back in the urn along with another marble of the same colour. Let Xn be the number of red marbles in the urn after n iterations of this procedure, and let Yn = Xn/(n + r + b). Then the sequence { Yn : n = 1, 2, 3, ... } is a martingale.

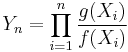

- (Likelihood-ratio testing in statistics) A population is thought to be distributed according to either a probability density f or another probability density g. A random sample is taken, the data being X1, ..., Xn. Let Yn be the "likelihood ratio"

- (which, in applications, would be used as a test statistic). If the population is actually distributed according to the density f rather than according to g, then { Yn : n = 1, 2, 3, ... } is a martingale with respect to { Xn : n = 1, 2, 3, ... }.

- Suppose each amoeba either splits into two amoebas, with probability p, or eventually dies, with probability 1 − p. Let Xn be the number of amoebas surviving in the nth generation (in particular Xn = 0 if the population has become extinct by that time). Let r be the probability of eventual extinction. (Finding r as a function of p is an instructive exercise. Hint: The probability that the descendants of an amoeba eventually die out is the same as the probability that the descendants of either of its immediate offspring eventually die out, given that the original amoeba has split.) Then

  $$\{\, r^{X_n} : n = 1, 2, 3, \ldots \,\}$$

  is a martingale with respect to { Xn : n = 1, 2, 3, ... }.

- The number of individuals of any particular species in an ecosystem of fixed size is a function of (discrete) time, and may be viewed as a sequence of random variables. This sequence is a martingale under the unified neutral theory of biodiversity.

- If { Nt : t ≥ 0 } is a Poisson process with intensity λ, then the compensated Poisson process { Nt − λt : t ≥ 0 } is a continuous-time martingale with right-continuous/left-limit sample paths.

- An example martingale series can easily be produced with computer software:

  - Microsoft Excel or similar spreadsheet software. Enter 0.0 in the A1 (top left) cell, and in the cell below it (A2) enter `=A1+NORMINV(RAND(),0,1)`. Now copy that cell by dragging down to create 300 or so copies. This will create a martingale series whose increments have mean 0 and standard deviation 1. With the cells still highlighted, go to the chart creation tool and create a chart of these values. Now every time a recalculation happens (in Excel the F9 key does this) the chart will display another martingale series.

  - R. To recreate the example above, issue `plot(cumsum(rnorm(100, mean=0, sd=1)), t="l", col="darkblue", lwd=3)`. To display another martingale series, reissue the command.
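The one-step identities behind three of the examples above (the squared fortune minus n, de Moivre's martingale, and Polya's urn) can be checked with exact rational arithmetic. The following is a minimal sketch; the function names are illustrative, and each function evaluates the conditional expectation of the next value given the current state directly from the transition probabilities stated in the corresponding example.

```python
from fractions import Fraction


def step_random_walk_square(x, n):
    """E[Y_{n+1} | X_n = x] for Y_n = X_n**2 - n with a fair +/-1 coin."""
    half = Fraction(1, 2)
    return half * ((x + 1) ** 2 - (n + 1)) + half * ((x - 1) ** 2 - (n + 1))


def step_de_moivre(x, p):
    """E[Y_{n+1} | X_n = x] for Y_n = (q/p)**X_n, heads with probability p."""
    q = 1 - p
    ratio = q / p
    return p * ratio ** (x + 1) + q * ratio ** (x - 1)


def step_polya(red, n, r, b):
    """E[Y_{n+1} | X_n = red] for Y_n = X_n / (n + r + b) in Polya's urn."""
    total = n + r + b             # marbles in the urn after n iterations
    p_red = Fraction(red, total)  # probability that the next draw is red
    return (p_red * Fraction(red + 1, total + 1)
            + (1 - p_red) * Fraction(red, total + 1))


# Each conditional expectation should equal the current value Y_n.
for x in range(-3, 4):
    for n in range(0, 5):
        assert step_random_walk_square(x, n) == x ** 2 - n

p = Fraction(1, 3)  # an arbitrary bias chosen for the check
for x in range(-3, 4):
    assert step_de_moivre(x, p) == ((1 - p) / p) ** x

r, b = 2, 3         # arbitrary initial urn contents chosen for the check
for n in range(0, 5):
    for red in range(r, r + n + 1):
        assert step_polya(red, n, r, b) == Fraction(red, n + r + b)

print("all one-step checks passed")
```

Using `Fraction` rather than floating point keeps the comparisons exact, so an equality failure would indicate a real discrepancy rather than rounding.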

Submartingales and supermartingales

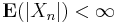

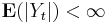

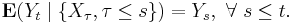

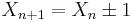

A (discrete-time) submartingale is a sequence  of integrable random variables satisfying

of integrable random variables satisfying

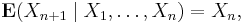

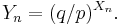

Analogously a (discrete-time) supermartingale satisfies

The more general definitions of both discrete-time and continuous-time martingales given earlier can be converted into the corresponding definitions of sub/supermartingales in the same way by replacing the equality for the conditional expectation by an inequality.

Here is a mnemonic for remembering which is which: "Life is a supermartingale; as time advances, expectation decreases."

Examples of submartingales and supermartingales

- Every martingale is also a submartingale and a supermartingale. Conversely, any stochastic process that is both a submartingale and a supermartingale is a martingale.

- Consider again the gambler who wins $1 when a coin comes up heads and loses $1 when the coin comes up tails. Suppose now that the coin may be biased, so that it comes up heads with probability p.

  - If p is equal to 1/2, the gambler on average neither wins nor loses money, and the gambler's fortune over time is a martingale.

  - If p is less than 1/2, the gambler loses money on average, and the gambler's fortune over time is a supermartingale.

  - If p is greater than 1/2, the gambler wins money on average, and the gambler's fortune over time is a submartingale.

- A convex function of a martingale is a submartingale, by Jensen's inequality. For example, the square of the gambler's fortune in the fair coin game is a submartingale (which also follows from the fact that Xn² − n is a martingale). Similarly, a concave function of a martingale is a supermartingale.
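The biased-coin classification and the squared-fortune example can be illustrated with a short exact computation. This is a sketch under the assumptions of the examples above (stakes of $1, heads with probability p); the function names are illustrative.

```python
from fractions import Fraction


def expected_next_fortune(x, p):
    """E[X_{n+1} | X_n = x] when heads (+1) has probability p, tails (-1) has 1 - p."""
    return p * (x + 1) + (1 - p) * (x - 1)


def classify(p):
    """Classify the fortune process by comparing E[X_{n+1} | X_n = x] with x."""
    drift = expected_next_fortune(0, p)  # equals 2p - 1, the same for every x
    if drift == 0:
        return "martingale"
    return "submartingale" if drift > 0 else "supermartingale"


def expected_next_square(x):
    """E[X_{n+1}**2 | X_n = x] for the fair coin."""
    half = Fraction(1, 2)
    return half * (x + 1) ** 2 + half * (x - 1) ** 2


for p in (Fraction(2, 5), Fraction(1, 2), Fraction(3, 5)):
    print(p, classify(p))

# The square of the fair-coin fortune grows by exactly 1 in expectation,
# so it is a submartingale, as Jensen's inequality predicts.
for x in range(-3, 4):
    assert expected_next_square(x) == x ** 2 + 1
```

The expected increase of exactly 1 per step in the squared fortune is the same fact that makes Xn² − n a martingale.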

Martingales and stopping times

A stopping time with respect to a sequence of random variables X1, X2, X3, ... is a random variable τ with the property that for each t, the occurrence or non-occurrence of the event τ = t depends only on the values of X1, X2, X3, ..., Xt. The intuition behind the definition is that at any particular time t, you can look at the sequence so far and tell if it is time to stop. An example in real life might be the time at which a gambler leaves the gambling table, which might be a function of his previous winnings (for example, he might leave only when he goes broke), but he can't choose to go or stay based on the outcome of games that haven't been played yet.
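The "look at the sequence so far" requirement can be tested exhaustively on short coin-toss sequences. The sketch below is illustrative only (the helper names are hypothetical): it checks, for every t, whether the event τ = t takes the same truth value on all paths that agree up to time t. A first hitting time passes this test, while the time at which the path attains its overall maximum does not, because that time depends on future tosses.

```python
from itertools import product


def first_hit(path, level):
    """First time t (1-based) at which the running sum reaches `level`, else None."""
    total = 0
    for t, step in enumerate(path, start=1):
        total += step
        if total == level:
            return t
    return None


def time_of_maximum(path):
    """First time t (1-based) at which the running sum attains its overall maximum."""
    sums, total = [], 0
    for step in path:
        total += step
        sums.append(total)
    return sums.index(max(sums)) + 1


def decided_by_prefix(rule, horizon):
    """True if, for every t, the event {rule(path) == t} depends only on path[:t]."""
    for t in range(1, horizon + 1):
        verdict = {}  # prefix of length t -> whether the event {tau == t} occurred
        for path in product((1, -1), repeat=horizon):
            occurred = rule(path) == t
            if verdict.setdefault(path[:t], occurred) != occurred:
                return False
    return True


print(decided_by_prefix(lambda path: first_hit(path, 2), 6))  # prints True
print(decided_by_prefix(time_of_maximum, 6))                  # prints False
```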

Some mathematicians have defined the concept of stopping time by requiring only that the occurrence or non-occurrence of the event τ = t be probabilistically independent of $X_{t+1}, X_{t+2}, \ldots$ but not that it be completely determined by the history of the process up to time t. That is a weaker condition than the one appearing in the paragraph above, but is strong enough to serve in some of the proofs in which stopping times are used.

One of the basic properties of martingales is that if $(X_t)_{t>0}$ is a (sub-/super-) martingale and $\tau$ is a stopping time, then the corresponding stopped process $(X_t^\tau)_{t>0}$ defined by $X_t^\tau := X_{\min\{\tau,t\}}$ is also a (sub-/super-) martingale.
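One consequence of this property is that the expectation of a stopped martingale stays at its initial value at every fixed time. The following sketch checks that consequence exactly for the fair ±1 coin game started at 0, stopped the first time the fortune reaches an upper or a lower barrier. The barriers +1 and −3 are arbitrary choices for illustration, as are the function names; the check covers only the constancy of the expectation, not the full conditional-expectation identity.

```python
from fractions import Fraction
from itertools import product


def stopped_value(path, t, upper, lower):
    """X_{min(tau, t)}, where tau is the first time the fortune hits upper or lower."""
    total = 0
    for step in path[:t]:
        total += step
        if total == upper or total == lower:
            break  # the process is frozen from time tau onwards
    return total


def expected_stopped_values(horizon, upper, lower):
    """E[X_{min(tau, t)}] for t = 0..horizon, averaged over all fair-coin paths."""
    paths = list(product((1, -1), repeat=horizon))
    return [
        Fraction(sum(stopped_value(path, t, upper, lower) for path in paths),
                 len(paths))
        for t in range(horizon + 1)
    ]


values = expected_stopped_values(horizon=8, upper=1, lower=-3)
print(all(v == 0 for v in values))  # prints True
```

The barriers are deliberately asymmetric so that the result does not follow from symmetry alone.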

The concept of a stopped martingale leads to a series of important theorems, for example the optional stopping theorem (or optional sampling theorem), which says that, under certain conditions, the expected value of a martingale at a stopping time is equal to its initial value. It can be used, for example, to prove the impossibility of successful betting strategies for a gambler with a finite lifetime and a house limit on bets.
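The doubling strategy on a fair coin gives a concrete instance. The sketch below assumes an initial stake of 1 and a cap of `max_rounds` consecutive bets, which stands in for a finite bankroll, a house limit, or a finite lifetime; these names and the cap are assumptions made for illustration. It lists every possible outcome with its exact probability and confirms that the expected profit is zero.

```python
from fractions import Fraction


def doubling_outcomes(max_rounds):
    """Return (profit, probability) pairs for the doubling strategy on a fair coin.

    The gambler stakes 1, doubles the stake after each loss, and stops at the
    first win or after `max_rounds` consecutive losses.
    """
    outcomes = []
    lost_so_far = 0
    for k in range(1, max_rounds + 1):
        stake = 2 ** (k - 1)
        # The first win occurs in round k with probability (1/2)**k.
        outcomes.append((stake - lost_so_far, Fraction(1, 2 ** k)))
        lost_so_far += stake
    # Every round lost: probability (1/2)**max_rounds, loss of 2**max_rounds - 1.
    outcomes.append((-lost_so_far, Fraction(1, 2 ** max_rounds)))
    return outcomes


def expected_profit(max_rounds):
    """Exact expected profit of the capped doubling strategy."""
    return sum(profit * prob for profit, prob in doubling_outcomes(max_rounds))


print(expected_profit(10))  # prints 0
```

With a cap of 10 rounds the gambler wins 1 with probability 1023/1024 and loses 1023 with probability 1/1024, so the frequent small gains are offset exactly by the rare large loss.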

See also

- Azuma's inequality

- Brownian motion

- Martingale central limit theorem

- Martingale representation theorem

- Doob martingale

- Doob's martingale convergence theorems

- Backwards martingale convergence theorem

- Local martingale

- Semimartingale

Notes

- [1] N. J. Balsara, Money Management Strategies for Futures Traders, Wiley Finance, 1992, ISBN 0-47-152215-5, p. 122

- [2] G. Grimmett and D. Stirzaker, Probability and Random Processes, 3rd edition, Oxford University Press, 2001, ISBN 0-19-857223-9

References

- The Splendors and Miseries of Martingales, Electronic Journal for History of Probability and Statistics, vol. 5, no. 1, June 2009

- David Williams, Probability with Martingales, Cambridge University Press, 1991, ISBN 0-521-40605-6

- Hagen Kleinert, Path Integrals in Quantum Mechanics, Statistics, Polymer Physics, and Financial Markets, 4th edition, World Scientific (Singapore, 2004); Paperback ISBN 981-238-107-4
